The Three Protocols That Finally Make AI Agents Actually Work

And why 2025 is the year we stop building AI demos and start building AI systems

So here's the thing about AI agents in 2024 — they were impressive in demos but absolutely terrible in production.

You'd build an agent that could answer questions about your company docs, connect it to Slack, maybe throw in some database queries... and then spend 3 months debugging why it kept breaking every time someone updated an API or switched frameworks.

The problem? Every connection was custom. Every integration was brittle. Scaling was impossible.

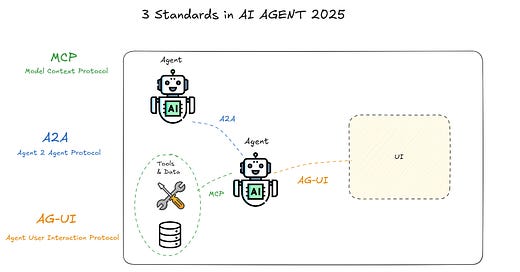

But 2025 is different. Three protocols have emerged that are finally solving this mess: MCP, A2A, and AG-UI.

Let me break down why these matter and how they work together.

The Core Problem: AI's Integration Nightmare

"Every new data source requires its own custom implementation, making truly connected systems difficult to scale." -- Anthropic, Model Context Protocol announcement

Think about your typical enterprise setup:

Agents built on different frameworks (LangChain, LangGraph, CrewAI)

Dozens of tools they need to access (Slack, databases, APIs, file systems)

Users expecting smooth, real-time interactions

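Without standards, you're basically building a new bridge for every connection. It's the M×N problem: M models each need a custom connector for each of N tools. That's M×N integrations to maintain. With a shared protocol, each model and each tool implements the standard once, so the count drops to roughly M+N.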
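To make the arithmetic concrete, here's a tiny sketch. The counts (4 models, 12 tools) are made-up numbers for illustration, not figures from any survey.

```python
def point_to_point(models: int, tools: int) -> int:
    """Connectors needed when every model integrates with every tool directly."""
    return models * tools


def with_shared_protocol(models: int, tools: int) -> int:
    """Adapters needed when each model and each tool implements one shared protocol."""
    return models + tools


# Hypothetical team: 4 models/agents and 12 tools.
models, tools = 4, 12
print(point_to_point(models, tools))        # 48
print(with_shared_protocol(models, tools))  # 16
```

Add a thirteenth tool and the first number grows by 4 while the second grows by 1. That gap is the whole argument for a standard.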

Side note: This is exactly why the web works so well. HTTP became the universal protocol, so any browser can talk to any server. We needed the same thing for AI agents.

Protocol #1: MCP (Model Context Protocol)

The "USB-C of AI Apps"

What it does: MCP creates a universal standard for AI agents to connect with external tools and data sources.

Here's the breakthrough: instead of building custom connectors for every tool, you expose a tool once through MCP and any MCP-compatible client can use it.

The numbers are pretty wild:

OpenAI announced MCP support in March 2025, starting with its Agents SDK, with the ChatGPT desktop app and Responses API to follow

Over 5,000 active MCP servers listed in public directories

Companies like Block, Zed, Replit, and Sourcegraph have integrated it

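Why this matters: Before MCP, if you wanted your AI to access Google Drive + Slack + your database, you'd write three different integrations, then rewrite them whenever you switched models or frameworks. With MCP, each tool is exposed once through an MCP server, and your agent talks to all of them through the same protocol.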

The technical bit: MCP works as a lightweight layer between your LLM and external services. It uses JSON-RPC 2.0 messages, carried over stdio for local servers or HTTP for remote ones, and provides dynamic tool discovery: agents can ask a server at runtime which tools it offers instead of relying on hardcoded interfaces.
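Here's a rough sketch of what that discovery-then-call exchange looks like on the wire. The `tools/list` and `tools/call` method names come from the MCP specification; the `search_messages` tool, its schema, and the canned server response are hypothetical, and nothing is actually sent over a network.

```python
import itertools
import json
from typing import Optional

_ids = itertools.count(1)


def jsonrpc_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request envelope with a fresh id."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


# 1. Discovery: ask the server which tools it offers.
list_request = jsonrpc_request("tools/list")

# 2. A response shaped like what a server might send back (hypothetical tool).
list_response = {
    "jsonrpc": "2.0",
    "id": list_request["id"],
    "result": {
        "tools": [
            {
                "name": "search_messages",
                "description": "Search chat messages by keyword",
                "inputSchema": {
                    "type": "object",
                    "properties": {"query": {"type": "string"}},
                    "required": ["query"],
                },
            }
        ]
    },
}

# 3. Invocation: call a tool the agent only learned about at runtime.
tool = list_response["result"]["tools"][0]
call_request = jsonrpc_request(
    "tools/call",
    {"name": tool["name"], "arguments": {"query": "Q3 forecast"}},
)

print(json.dumps(call_request, indent=2))
```

Notice that the agent code never hardcodes `search_messages`. It reads the name and input schema from the server's reply, which is what "dynamic tool discovery" means in practice.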

Protocol #2: A2A (Agent-to-Agent Protocol)

Making Agents Actually Talk to Each Other

What it does: A2A is Google's open protocol that lets AI agents communicate and collaborate, regardless of who built them or what framework they use.

Remember when Google launched this in April 2025? They got backing from 50+ tech partners including Salesforce, SAP, MongoDB, even consulting firms like McKinsey and Deloitte. That's not just technical validation — that's enterprise buy-in.

The key innovation: "Agent Cards" — think digital business cards in JSON format. Each agent describes what it can do, what inputs it accepts, and how to interact with it.

Here's how it works:

Discovery: Agents advertise capabilities via Agent Cards

Negotiation: They figure out how to work together (text, forms, media)

Collaboration: They securely handle tasks without exposing internal logic
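To give a feel for the discovery step, here's a minimal sketch of two Agent Cards and a lookup over them. The field names follow the general shape of A2A cards as I understand it, but the agents, URLs, and skills are invented for illustration, and `agents_with_tag` is a helper I made up, not part of any A2A SDK.

```python
import json
from typing import List

# Illustrative Agent Cards; every value here is hypothetical.
analysis_agent_card = {
    "name": "Sales Analysis Agent",
    "description": "Runs statistical analysis on sales datasets",
    "url": "https://agents.example.com/analysis",
    "version": "1.0.0",
    "capabilities": {"streaming": True},
    "defaultInputModes": ["text"],
    "defaultOutputModes": ["text"],
    "skills": [
        {
            "id": "trend-analysis",
            "name": "Trend analysis",
            "description": "Finds quarter-over-quarter trends",
            "tags": ["sales", "statistics"],
        }
    ],
}

report_agent_card = {
    "name": "Report Writer Agent",
    "description": "Turns findings into formatted reports",
    "url": "https://agents.example.com/reports",
    "version": "1.0.0",
    "capabilities": {"streaming": False},
    "defaultInputModes": ["text"],
    "defaultOutputModes": ["text"],
    "skills": [
        {
            "id": "write-report",
            "name": "Report writing",
            "description": "Drafts an executive summary",
            "tags": ["reporting"],
        }
    ],
}


def agents_with_tag(cards: List[dict], tag: str) -> List[str]:
    """Return names of agents that advertise at least one skill carrying the tag."""
    return [
        card["name"]
        for card in cards
        if any(tag in skill.get("tags", []) for skill in card.get("skills", []))
    ]


cards = [analysis_agent_card, report_agent_card]
print(agents_with_tag(cards, "statistics"))  # ['Sales Analysis Agent']
print(json.dumps(analysis_agent_card["skills"][0], indent=2))
```

The card says what an agent can do and where to reach it, and nothing about how it does it. That's what lets agents from different vendors cooperate without exposing their internals.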

Why this is huge: Instead of isolated agents that work alone, you get collaborative agent teams. A research agent can hand off findings to an analysis agent, which passes results to a reporting agent — all automatically.

Did you know? Even Microsoft adopted A2A, integrating it into Azure AI Foundry and Copilot Studio. When Microsoft backs Google's protocol, you know it's solid.

Protocol #3: AG-UI (Agent-User Interaction Protocol)

Where Agents Meet Humans

What it does: AG-UI standardizes how AI agents connect to frontend applications and interact with users in real-time.

This one just launched in May 2025 from CopilotKit, and it solves a problem every AI developer has faced: How do you show users what your agent is actually doing?

The breakthrough: Instead of agents that work in black boxes, AG-UI streams real-time events:

Messages as they're generated

Tool calls as they happen

State changes as they occur

User inputs when needed

Technical details: The reference setup uses Server-Sent Events (SSE) over HTTP to stream JSON events from backend agents to frontend UIs, though the protocol isn't tied to a single transport. Simple, but powerful.
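Here's a small sketch of that streaming idea: a backend turns agent events into SSE frames, and a frontend decodes them back. The `data: ...` framing is standard SSE; the event type names are modeled on AG-UI's event vocabulary, but treat the exact names and fields as assumptions, and the run itself is made up.

```python
import json
from typing import Iterator, List


def sse_frame(event: dict) -> str:
    """Serialize one event as an SSE frame: a 'data:' line followed by a blank line."""
    return f"data: {json.dumps(event)}\n\n"


def run_events() -> Iterator[dict]:
    """Yield a hypothetical agent run: a message chunk, a tool call, a state change."""
    yield {"type": "RUN_STARTED", "runId": "run-1"}
    yield {"type": "TEXT_MESSAGE_CONTENT", "messageId": "m1", "delta": "Pulling Q3 numbers"}
    yield {"type": "TOOL_CALL_START", "toolCallId": "t1", "toolCallName": "query_sales"}
    yield {"type": "STATE_DELTA", "delta": [{"op": "replace", "path": "/status", "value": "analyzing"}]}
    yield {"type": "RUN_FINISHED", "runId": "run-1"}


def parse_sse(stream: str) -> List[dict]:
    """Frontend-side sketch: split on blank lines and decode each 'data:' payload."""
    prefix = "data: "
    return [
        json.loads(frame[len(prefix):])
        for frame in stream.split("\n\n")
        if frame.startswith(prefix)
    ]


stream = "".join(sse_frame(event) for event in run_events())
for event in parse_sse(stream):
    print(event["type"])
```

A real frontend would consume frames as they arrive rather than parsing one big string, but the shape is the same: one small JSON event per frame, rendered as soon as it lands.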

Why this changes everything: Remember Cursor? That's the perfect example of human-agent collaboration. You see what the AI is doing, you can guide it, interrupt it, work together in a shared workspace. AG-UI makes this pattern standard across all agent frameworks.

How These Three Work Together

Here's where it gets interesting — these protocols are complementary, not competitive:

MCP = Agent ↔ Tools

A2A = Agent ↔ Agent

AG-UI = Agent ↔ Human

Let me give you a practical example:

User asks an agent to "analyze our Q3 sales vs competitors"

AG-UI streams the request and shows real-time progress

Agent uses MCP to pull data from Salesforce, financial databases, web APIs

Agent uses A2A to delegate analysis to a specialized data science agent

Results stream back through AG-UI with charts, insights, and next steps

User can interrupt, ask follow-ups, or approve actions — all in real-time
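The flow above can be sketched in a few lines, with each protocol replaced by a stub. Everything here is hypothetical: the function names, the `crm.sales_report` tool, the `data-science-agent`, and the canned sales numbers. It only shows which layer handles which step, not how any real SDK works.

```python
from typing import Callable, Dict, List

Event = Dict[str, str]


def call_tool_via_mcp(tool: str, query: str) -> Dict[str, float]:
    """Stub for an MCP tool call; returns canned numbers instead of real data."""
    return {"our_q3_sales": 120.0, "competitor_q3_sales": 100.0}


def delegate_via_a2a(agent: str, data: Dict[str, float]) -> str:
    """Stub for an A2A task handed to a specialist agent."""
    ratio = data["our_q3_sales"] / data["competitor_q3_sales"]
    return f"Q3 sales are {ratio:.0%} of the competitor's"


def handle_request(prompt: str, emit: Callable[[Event], None]) -> str:
    """Orchestrate one request, emitting a progress event at each step (the AG-UI role)."""
    emit({"layer": "AG-UI", "step": "request received"})
    data = call_tool_via_mcp("crm.sales_report", prompt)
    emit({"layer": "MCP", "step": "data fetched"})
    summary = delegate_via_a2a("data-science-agent", data)
    emit({"layer": "A2A", "step": "analysis returned"})
    emit({"layer": "AG-UI", "step": "result streamed"})
    return summary


events: List[Event] = []
result = handle_request("analyze our Q3 sales vs competitors", events.append)
print(result)
for event in events:
    print(event["layer"], "->", event["step"])
```

Swapping a stub for a real client changes one function body, and the orchestration code stays the same. That separation is the practical payoff of having one protocol per boundary.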

The Enterprise Impact

"AI agents can ensure compliance with evolving regulations." -- From the enterprise AI strategy reports

This isn't just about better tech — it's about trust and governance. Enterprise AI needs:

Audit trails (who did what, when)

Policy enforcement (what agents can access)

Error handling (what happens when things break)

These three protocols provide the standardized interfaces needed for enterprise-grade governance. You can monitor MCP tool calls, track A2A agent collaborations, and log AG-UI user interactions — all through standard APIs.
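As one way to picture this, here's a minimal sketch of a gateway that sits in front of tool calls, enforces an allow-list, and records an audit entry for every attempt. `AuditedToolGateway` and the example tools are hypothetical; none of the three protocols ships this class. The point is only that a standard call shape gives you a single place to hook in governance.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any, Callable, Dict, List, Set


@dataclass
class AuditedToolGateway:
    allowed: Dict[str, Set[str]]           # agent name -> tools it may call
    tools: Dict[str, Callable[..., Any]]   # tool name -> implementation
    log: List[Dict[str, str]] = field(default_factory=list)

    def call(self, agent: str, tool: str, **arguments: Any) -> Any:
        """Run a tool on behalf of an agent, logging who did what, when, and the outcome."""
        entry = {
            "time": datetime.now(timezone.utc).isoformat(),
            "agent": agent,
            "tool": tool,
            "outcome": "ok",
        }
        try:
            # Policy enforcement: refuse tools outside the agent's allow-list.
            if tool not in self.allowed.get(agent, set()):
                raise PermissionError(f"{agent} may not call {tool}")
            return self.tools[tool](**arguments)
        except Exception as exc:
            # Error handling: record the failure, then let the caller decide.
            entry["outcome"] = f"error: {type(exc).__name__}"
            raise
        finally:
            # Audit trail: every attempt is logged, allowed or not.
            self.log.append(entry)


gateway = AuditedToolGateway(
    allowed={"reporter": {"read_sales"}},
    tools={
        "read_sales": lambda quarter: f"sales for {quarter}",
        "delete_records": lambda: None,
    },
)

sales = gateway.call("reporter", "read_sales", quarter="Q3")
try:
    gateway.call("reporter", "delete_records")
except PermissionError as denied:
    print("blocked:", denied)

for entry in gateway.log:
    print(entry["agent"], entry["tool"], entry["outcome"])
```

The denied call never reaches the tool, but it still shows up in the log. That's usually what auditors want to see.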

What This Means for Developers

Before these protocols: Every agent project started from scratch. Custom WebSockets, ad-hoc JSON formats, brittle integrations.

After these protocols: Pick your agent framework (LangGraph, CrewAI, etc.), connect via MCP to your tools, coordinate with other agents via A2A, and stream to users via AG-UI. Standard components, reliable connections.

The adoption story:

Java frameworks (Spring AI, Quarkus) added MCP support

Microsoft integrated A2A into their developer tools

AG-UI works with LangGraph, CrewAI, Mastra out of the box

Summary

MCP makes tool integration standard and reliable

A2A enables true multi-agent collaboration

AG-UI brings humans into the agent workflow

Together, they solve the M×N integration problem that's plagued AI development

2025 is when AI agents finally got the "plumbing" they needed for enterprise deployment

The question isn't whether these protocols will succeed — the major players have already adopted them. The question is how quickly you'll start using them in your projects.

Are you experimenting with any of these protocols yet? What's your biggest challenge in building production AI agents? Let me know in the comments.